This is the documentation for the Nextflow online tutorial that will take place on the framework of the 'Reproducible genomics workflows using Nextflow and nf-core' workshop organized by BovReg and the CRG.

The aim of this guide is to cover a vast part of Nextflow features ranging from basic to advanced language aspects.

1. Setup

1.1. Requirements

Nextflow can be used on any POSIX compatible system (Linux, OS X, etc). It requires Bash and Java 8 (or later) to be installed.

Optional requirements:

-

Docker engine 1.10.x (or later)

-

Singularity 2.5.x (or later, optional)

-

Conda 4.5 (or later, optional)

-

Graphviz (optional)

-

AWS CLI (optional)

-

AWS Cloud 9 environment properly configured (optional)

1.2. Training materials download

Once you launched the environment, download the training materials running the command below on the terminal window that you will find at the botton of the page:

aws s3 sync s3://cbcrg-eu/nf-training-bovreg .|

Don’t miss the ending dot in the above command. |

We recommend to follow this tutorial using the AWS Cloud 9 environment. However, if you want to use your machine you can

alsodownload all the materials using curl:

curl https://cbcrg-eu.s3-eu-west-1.amazonaws.com/nf-training-bovreg/nf-training-bovreg.tar.gz | tar xvzOr alternatively if you have wget instead of curl in your machine:

wget -q -O- https://cbcrg-eu.s3-eu-west-1.amazonaws.com/nf-training-bovreg/nf-training-bovreg.tar.gz | tar xvz1.3. Environment setup

To complete the environment setup you just need to run the script below, which installs Java an other requirements for this tutorial.

source setup/all.sh1.4. Nextflow Installation

Install the latest version of Nextflow copy & pasting the following snippet in a terminal window:

curl https://get.nextflow.io | bash

mv nextflow ~/binCheck the correct installation running the following command:

nextflow info2. Get started with Nextflow

2.1. Basic concepts

Nextflow is workflow orchestration engine and a programming domain specific language (DSL) that eases the writing of data-intensive computational pipelines.

It is designed around the idea that the Linux platform is the lingua franca of data science. Linux provides many simple but powerful command-line and scripting tools that, when chained together, facilitate complex data manipulations.

Nextflow extends this approach, adding the ability to define complex program interactions and a high-level parallel computational environment based on the dataflow programming model. Nextflow core features are:

-

enable workflows portability & reproducibility

-

simplify parallelization and large scale deployment

-

easily integrate existing tools, systems & industry standards

2.1.1. Processes and channels

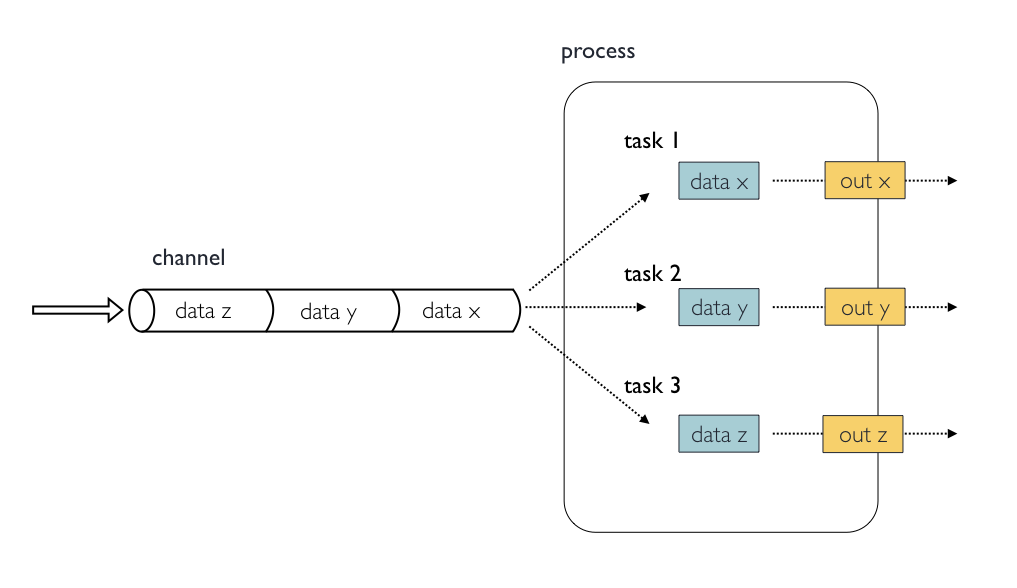

In practice a Nextflow pipeline script is made by joining together different processes. Each process can be written in any scripting language that can be executed by the Linux platform (Bash, Perl, Ruby, Python, etc.).

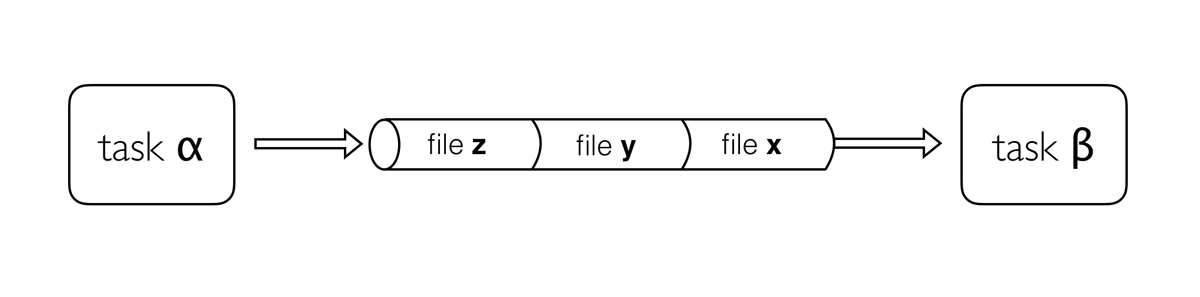

Processes are executed independently and are isolated from each other, i.e. they do not share a common (writable) state. The only way they can communicate is via asynchronous FIFO queues, called channels in Nextflow.

Any process can define one or more channels as input and output. The interaction between these processes, and ultimately the pipeline execution flow itself, is implicitly defined by these input and output declarations.

2.1.2. Execution abstraction

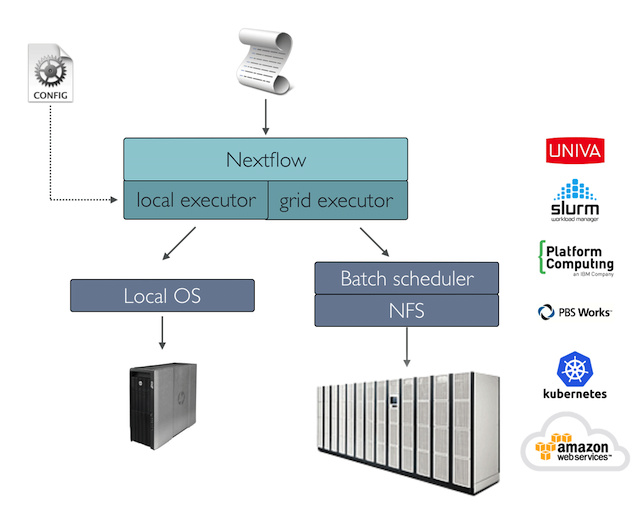

While a process defines what command or script has to be executed, the executor determines how that script is actually run in the target system.

If not otherwise specified, processes are executed on the local computer. The local executor is very useful for pipeline development and testing purposes, but for real world computational pipelines an HPC or cloud platform is often required.

In other words, Nextflow provides an abstraction between the pipeline’s functional logic and the underlying execution system. Thus it is possible to write a pipeline once and to seamlessly run it on your computer, a grid platform, or the cloud, without modifying it, by simply defining the target execution platform in the configuration file.

It provides out-of-the-box support for major batch schedulers and cloud platforms:

-

Grid engine (Open/Sun/Univa)

-

IBM Platform LSF

-

Linux SLURM

-

PBS Works

-

Torque

-

Moab

-

HTCondor

-

Amazon Batch

-

Google Life Sciences

-

Kubernetes

2.1.3. Scripting language

Nextflow implements declarative domain specific language (DSL) simplifies the writing of writing complex data analysis workflows as an extension of a general purpose programming language.

This approach makes Nextflow very flexible because allows to have in the same computing environment the benefit of concise DSL that allow the handling of recurrent use cases with ease and the flexibility and power of a general purpose programming language to handle corner cases, which may be difficult to implement using a declarative approach.

In practical terms Nextflow scripting is an extension of the Groovy programming language, which in turn is a super-set of the Java programming language. Groovy can be considered as Python for Java in that is simplifies the writing of code and is more approachable.

2.2. Your first script

Copy the following example into your favourite text editor and save it to a file named hello.nf :

#!/usr/bin/env nextflow

params.greeting = 'Hello world!'

greeting_ch = Channel.from(params.greeting)

process splitLetters {

input:

val x from greeting_ch

output:

file 'chunk_*' into letters

"""

printf '$x' | split -b 6 - chunk_

"""

}

process convertToUpper {

input:

file y from letters.flatten()

output:

stdout into result

"""

cat $y | tr '[a-z]' '[A-Z]'

"""

}

result.view{ it.trim() }This script defines two processes. The first splits a string into files containing chunks of 6 characters. The second receives these files and transforms their

contents to uppercase letters. The resulting strings are emitted on the result channel and the final output is printed by the view operator.

Execute the script by entering the following command in your terminal:

nextflow run hello.nfIt will output something similar to the text shown below:

N E X T F L O W ~ version 20.10.0

Launching `hello.nf` [infallible_wilson] - revision: db17b351c4

executor > local (3)

[ca/361e5c] process > splitLetters (1) [100%] 1 of 1 ✔

[5a/44a867] process > convertToUpper (1) [100%] 2 of 2 ✔

HELLO

WORLD!You can see that the first process is executed once, and the second twice. Finally the result string is printed.

It’s worth noting that the process convertToUpper is executed in parallel, so there’s no guarantee that the instance

processing the first split (the chunk Hello) will be executed before the one processing the second split (the chunk world!).

Thus, it is perfectly possible that you will get the final result printed out in a different order:

WORLD!

HELLO|

The hexadecimal numbers, like |

2.3. Modify and resume

Nextflow keeps track of all the processes executed in your pipeline. If you modify some parts of your script, only the processes that are actually changed will be re-executed. The execution of the processes that are not changed will be skipped and the cached result used instead.

This helps a lot when testing or modifying part of your pipeline without having to re-execute it from scratch.

For the sake of this tutorial, modify the convertToUpper process in the previous example, replacing the process script with the string

rev $y, so that the process looks like this:

process convertToUpper {

input:

file y from letters.flatten()

output:

stdout into result

"""

rev $y

"""

}Then save the file with the same name, and execute it by adding the -resume option to the command line:

nextflow run hello.nf -resumeIt will print output similar to this:

N E X T F L O W ~ version 20.10.0

Launching `hello.nf` [gloomy_shockley] - revision: 2a9917d420

executor > local (2)

[ca/361e5c] process > splitLetters (1) [100%] 1 of 1, cached: 1 ✔

[56/522eae] process > convertToUpper (1) [100%] 2 of 2 ✔

olleH

!dlrowYou will see that the execution of the process splitLetters is actually skipped (the process ID is the same), and its results are retrieved from the cache. The second process is executed as expected, printing the reversed strings.

|

The pipeline results are cached by default in the directory $PWD/work. Depending on your script, this folder can take of lot of disk space. If your are sure you won’t resume your pipeline execution, clean this folder periodically. |

2.4. Pipeline parameters

Pipeline parameters are simply declared by prepending to a variable name the prefix params, separated by dot character.

Their value can be specified on the command line by prefixing the parameter name with a double dash character, i.e.

--paramName

For the sake of this tutorial, you can try to execute the previous example specifying a different input string parameter, as shown below:

nextflow run hello.nf --greeting 'Bonjour le monde!'The string specified on the command line will override the default value of the parameter. The output will look like this:

N E X T F L O W ~ version 20.10.0

Launching `hello.nf` [distracted_hilbert] - revision: 2a9917d420

executor > local (4)

[82/18a151] process > splitLetters (1) [100%] 1 of 1 ✔

[a5/189102] process > convertToUpper (1) [100%] 3 of 3 ✔

uojnoB

m el r

!edno3. Channels

Channels are a key data structure of Nextflow that allows the implementation of reactive-functional oriented computational workflows based on the Dataflow programming paradigm.

They are used to logically connect tasks each other or to implement functional style data transformations.

3.1. Channel types

Nextflow distinguish two different kinds of channels: queue channels and value channels.

3.1.1. Queue channel

A queue channel is a asynchronous unidirectional FIFO queue which connects two processes or operators.

-

What asynchronous means? That operations are non-blocking.

-

What unidirectional means? That data flow from a producer to a consumer.

-

What FIFO means? That the data is guaranteed to be delivered in the same order as it is produced.

A queue channel is implicitly created by process output definitions or using channel factories methods such as Channel.from or Channel.fromPath.

Try the following snippets:

ch = Channel.from(1,2,3)

println(ch) (1)

ch.view() (2)| 1 | Use the built-in println function to print the ch variable. |

| 2 | Apply the view method to the ch channel, therefore prints each item emitted by the channels. |

Exercise

Try to execute this snippet, it will produce an error message.

p = Channel.from(1,2,3)

p.view()

p.view()| A queue channel can have one and exactly one producer and one and exactly one consumer. |

3.1.2. Value channels

A value channel a.k.a. singleton channel by definition is bound to a single value and it can be read unlimited times without consuming its content.

ch = Channel.value('Hello')

ch.view()

ch.view()

ch.view()It prints:

Hello

Hello

Hello3.2. Channel factories

3.2.1. value

The value factory method is used to create a value channel. An optional not null argument

can be specified to bind the channel to a specific value. For example:

ch1 = Channel.value() (1)

ch2 = Channel.value( 'Hello there' ) (2)

ch2 = Channel.value( [1,2,3,4,5] ) (3)| 1 | Creates an empty value channel. |

| 2 | Creates a value channel and binds a string to it. |

| 3 | Creates a value channel and binds a list object to it that will be emitted as a sole emission. |

3.2.2. from

The factory Channel.from allows the creation of a queue channel with the values specified as argument.

ch = Channel.from( 1, 3, 5, 7 )

ch.view{ "value: $it" }The first line in this example creates a variable ch which holds a channel object. This channel emits the values specified as a parameter in the from method. Thus the second line will print the following:

value: 1 value: 3 value: 5 value: 7

Method Channel.from will be deprecated and replaced by Channel.of (see below)

|

3.2.3. of

The method Channel.of works in a similar manner to Channel.from, though it fixes some inconsistent behavior of the latter and provides a better handling for range of values. For example:

Channel

.of(1..23, 'X', 'Y')

.view()3.2.4. fromList

The method Channel.fromList creates a channel emitting the elements provided by a list objects specified as argument:

list = ['hello', 'world']

Channel

.fromList(list)

.view()3.2.5. fromPath

The fromPath factory method create a queue channel emitting one or more files

matching the specified glob pattern.

Channel.fromPath( '/data/big/*.txt' )This example creates a channel and emits as many items as there are files with txt extension in the /data/big folder. Each element is a file object implementing the Path interface.

Two asterisks, i.e. **, works like * but crosses directory boundaries. This syntax is generally used for matching complete paths. Curly brackets specify a collection of sub-patterns.

|

| Name | Description |

|---|---|

glob |

When |

type |

Type of paths returned, either |

hidden |

When |

maxDepth |

Maximum number of directory levels to visit (default: |

followLinks |

When |

relative |

When |

checkIfExists |

When |

Learn more about the blog pattern syntax at this link.

Exercise

Use the Channel.fromPath method to create a channel emitting all files with the suffix .fq in the data/ggal/ and any subdirectory, then print the file name.

3.2.6. fromFilePairs

The fromFilePairs method creates a channel emitting the file pairs matching a glob pattern provided by the user. The matching files are emitted as tuples in which the first element is the grouping key of the matching pair and the second element is the list of files (sorted in lexicographical order).

Channel

.fromFilePairs('/my/data/SRR*_{1,2}.fastq')

.view()It will produce an output similar to the following:

[SRR493366, [/my/data/SRR493366_1.fastq, /my/data/SRR493366_2.fastq]]

[SRR493367, [/my/data/SRR493367_1.fastq, /my/data/SRR493367_2.fastq]]

[SRR493368, [/my/data/SRR493368_1.fastq, /my/data/SRR493368_2.fastq]]

[SRR493369, [/my/data/SRR493369_1.fastq, /my/data/SRR493369_2.fastq]]

[SRR493370, [/my/data/SRR493370_1.fastq, /my/data/SRR493370_2.fastq]]

[SRR493371, [/my/data/SRR493371_1.fastq, /my/data/SRR493371_2.fastq]]| The glob pattern must contain at least a star wildcard character. |

| Name | Description |

|---|---|

type |

Type of paths returned, either |

hidden |

When |

maxDepth |

Maximum number of directory levels to visit (default: |

followLinks |

When |

size |

Defines the number of files each emitted item is expected to hold (default: 2). Set to |

flat |

When |

checkIfExists |

When |

Exercise

Use the fromFilePairs method to create a channel emitting all pairs of fastq read in the data/ggal/

directory and print them.

Then use the flat:true option and compare the output with the previous execution.

3.2.7. fromSRA

The Channel.fromSRA method that makes it possible to query of NCBI SRA archive and returns a channel emitting the FASTQ files matching the specified selection criteria.

The query can be project ID or accession number(s) supported by the NCBI ESearch API. For example the following snippet:

Channel

.fromSRA('SRP043510')

.view()prints:

[SRR1448794, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR144/004/SRR1448794/SRR1448794.fastq.gz]

[SRR1448795, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR144/005/SRR1448795/SRR1448795.fastq.gz]

[SRR1448792, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR144/002/SRR1448792/SRR1448792.fastq.gz]

[SRR1448793, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR144/003/SRR1448793/SRR1448793.fastq.gz]

[SRR1910483, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR191/003/SRR1910483/SRR1910483.fastq.gz]

[SRR1910482, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR191/002/SRR1910482/SRR1910482.fastq.gz]

(remaining omitted)Multiple accession IDs can be specified using a list object:

ids = ['ERR908507', 'ERR908506', 'ERR908505']

Channel

.fromSRA(ids)

.view()Results in:

[ERR908507, [ftp://ftp.sra.ebi.ac.uk/vol1/fastq/ERR908/ERR908507/ERR908507_1.fastq.gz, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/ERR908/ERR908507/ERR908507_2.fastq.gz]]

[ERR908506, [ftp://ftp.sra.ebi.ac.uk/vol1/fastq/ERR908/ERR908506/ERR908506_1.fastq.gz, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/ERR908/ERR908506/ERR908506_2.fastq.gz]]

[ERR908505, [ftp://ftp.sra.ebi.ac.uk/vol1/fastq/ERR908/ERR908505/ERR908505_1.fastq.gz, ftp://ftp.sra.ebi.ac.uk/vol1/fastq/ERR908/ERR908505/ERR908505_2.fastq.gz]]| Read pairs are implicitly managed are returned as a list of files. |

It’s straightforward to use this channel as an input using the usual Nextflow syntax. For example:

params.accession = 'SRP043510'

reads = Channel.fromSRA(params.accession)

process fastqc {

input:

tuple sample_id, file(reads_file) from reads

output:

file("fastqc_${sample_id}_logs") into fastqc_ch

script:

"""

mkdir fastqc_${sample_id}_logs

fastqc -o fastqc_${sample_id}_logs -f fastq -q ${reads_file}

"""

}The code snippet above creates a channel containing 24 samples from a chromatin dynamics study and runs FASTQC on the resulting files.

4. Processes

A process is the basic Nextflow computing primitive to execute foreign function i.e. custom scripts or tools.

The process definition starts with keyword the process, followed by process name and finally the process body delimited by curly brackets. The process body must contain a string which represents the command or, more generally, a script that is executed by it.

A basic process looks like the following example:

process sayHello {

"""

echo 'Hello world!'

"""

}A process may contain five definition blocks, respectively: directives, inputs, outputs, when clause and finally the process script. The syntax is defined as follows:

process < name > {

[ directives ] (1)

input: (2)

< process inputs >

output: (3)

< process outputs >

when: (4)

< condition >

[script|shell|exec]: (5)

< user script to be executed >

}

| 1 | Zero, one or more process directives |

| 2 | Zero, one or more process inputs |

| 3 | Zero, one or more process outputs |

| 4 | An optional boolean conditional to trigger the process execution |

| 5 | The command to be executed |

4.1. Script

The script block is a string statement that defines the command that is executed by the process to carry out its task.

A process contains one and only one script block, and it must be the last statement when the process contains input and output declarations.

The script block can be a simple string or multi-line string. The latter simplifies the writing of non trivial scripts composed by multiple commands spanning over multiple lines. For example::

process example {

script:

"""

blastp -db /data/blast -query query.fa -outfmt 6 > blast_result

cat blast_result | head -n 10 | cut -f 2 > top_hits

blastdbcmd -db /data/blast -entry_batch top_hits > sequences

"""

}By default the process command is interpreted as a Bash script. However any other scripting language can be used just simply starting the script with the corresponding Shebang declaration. For example:

process pyStuff {

script:

"""

#!/usr/bin/env python

x = 'Hello'

y = 'world!'

print ("%s - %s" % (x,y))

"""

}| This allows the compositing in the same workflow script of tasks using different programming languages which may better fit a particular job. However for large chunks of code is suggested to save them into separate files and invoke them from the process script. |

4.1.1. Script parameters

Process script can be defined dynamically using variable values like in other string.

params.data = 'World'

process foo {

script:

"""

echo Hello $params.data

"""

}| A process script can contain any string format supported by the Groovy programming language. This allows us to use string interpolation or multiline string as in the script above. Refer to String interpolation for more information. |

Since Nextflow uses the same Bash syntax for variable substitutions in strings, Bash environment variables need to be escaped using \ character.

|

process foo {

script:

"""

echo "The current directory is \$PWD"

"""

}| Try to modify the above script using $PWD instead of \$PWD and check the difference. |

This can be tricky when the script uses many Bash variables. A possible alternative is to use a script string delimited by single-quote characters

process bar {

script:

'''

echo $PATH | tr : '\\n'

'''

}However this won’t allow any more the usage of Nextflow variables in the command script.

Another alternative is to use a shell statement instead of script which uses a different

the following syntax for Nextflow variables: !{..}. This allow to use both Nextflow and Bash variables in

the same script.

params.data = 'le monde'

process baz {

shell:

'''

X='Bonjour'

echo $X !{params.data}

'''

}4.1.2. Conditional script

The process script can also be defined in a complete dynamic manner using a if statement or any other expression evaluating to string value. For example:

params.aligner = 'kallisto'

process foo {

script:

if( params.aligner == 'kallisto' )

"""

kallisto --reads /some/data.fastq

"""

else if( params.aligner == 'salmon' )

"""

salmons --reads /some/data.fastq

"""

else

throw new IllegalArgumentException("Unknown aligner $params.aligner")

}Exercise

Write a custom function that given the aligner name as parameter returns the command string to be executed. Then use this function as the process script body.

4.2. Inputs

Nextflow processes are isolated from each other but can communicate between themselves sending values through channels.

Inputs implicitly determine the dependency and the parallel execution of the process. The process execution is fired each time a new data is ready to be consumed from the input channel:

The input block defines which channels the process is expecting to receive inputs data from. You can only define one input block at a time and it must contain one or more inputs declarations.

The input block follows the syntax shown below:

input:

<input qualifier> <input name> [from <source channel>]4.2.1. Input values

The val qualifier allows you to receive data of any type as input. It can be accessed in the process script by using the specified input name, as shown in the following example:

num = Channel.from( 1, 2, 3 )

process basicExample {

input:

val x from num

"""

echo process job $x

"""

}In the above example the process is executed three times, each time a value is received from the channel num and used to process the script. Thus, it results in an output similar to the one shown below:

process job 3

process job 1

process job 2| The channel guarantees that items are delivered in the same order as they have been sent - but - since the process is executed in a parallel manner, there is no guarantee that they are processed in the same order as they are received. |

4.2.2. Input files

The file qualifier allows the handling of file values in the process execution context. This means that

Nextflow will stage it in the process execution directory, and it can be access in the script by using the name specified in the input declaration.

reads = Channel.fromPath( 'data/ggal/*.fq' )

process foo {

input:

file 'sample.fastq' from reads

script:

"""

your_command --reads sample.fastq

"""

}The input file name can also be defined using a variable reference as shown below:

reads = Channel.fromPath( 'data/ggal/*.fq' )

process foo {

input:

file sample from reads

script:

"""

your_command --reads $sample

"""

}The same syntax it’s also able to handle more than one input file in the same execution. Only change the channel composition.

reads = Channel.fromPath( 'data/ggal/*.fq' )

process foo {

input:

file sample from reads.collect()

script:

"""

your_command --reads $sample

"""

}

When a process declares an input file the corresponding channel elements must be file objects i.e.

created with the file helper function from the file specific channel factories e.g. Channel.fromPath or Channel.fromFilePairs.

|

Consider the following snippet:

params.genome = 'data/ggal/transcriptome.fa'

process foo {

input:

file genome from params.genome

script:

"""

your_command --reads $genome

"""

}The above code creates a temporary file named input.1 with the string data/ggal/transcriptome.fa as content. That likely is not what you wanted to do.

4.2.3. Input path

As of version 19.10.0, Nextflow introduced a new path input qualifier that simplifies the handling of cases such as the one shown above.

In a nutshell the input path automatically handles string values as file objects. The following example works as expected:

params.genome = "$baseDir/data/ggal/transcriptome.fa"

process foo {

input:

path genome from params.genome

script:

"""

your_command --reads $genome

"""

}| The path qualifier should be preferred over file to handle process input files when using Nextflow 19.10.0 or later. |

Exercise

Write a script that creates a channel containing all read files matching the pattern data/ggal/*_1.fq followed by a process

that concatenates them into a single file and prints the first 20 lines.

4.2.4. Combine input channels

A key feature of processes is the ability to handle inputs from multiple channels. However it’s important to understands how the content of channel and their semantic affect the execution of a process.

Consider the following example:

process foo {

echo true

input:

val x from Channel.from(1,2,3)

val y from Channel.from('a','b','c')

script:

"""

echo $x and $y

"""

}Both channels emit three value, therefore the process is executed three times, each time with a different pair:

-

(1, a)

-

(2, b)

-

(3, c)

What is happening is that the process waits until there’s a complete input configuration i.e. it receives an input value from all the channels declared as input.

When this condition is verified, it consumes the input values coming from the respective channels, and spawns a task execution, then repeat the same logic until one or more channels have no more content.

This means channel values are consumed serially one after another and the first empty channel cause the process execution to stop even if there are other values in other channels.

What does it happen when not all channels have the same cardinality (i.e. they emit a different number of elements)?

For example:

process foo {

echo true

input:

val x from Channel.from(1,2)

val y from Channel.from('a','b','c','d')

script:

"""

echo $x and $y

"""

}In the above example the process is executed only two times, because when a channel has no more data to be processed it stops the process execution.

| Note however that value channel do not affect the process termination. |

To better understand this behavior compare the previous example with the following one:

process bar {

echo true

input:

val x from Channel.value(1)

val y from Channel.from('a','b','c')

script:

"""

echo $x and $y

"""

}Exercise

Write a process that is executed for each read file matching the pattern data/ggal/*_1.fa and

uses the same data/ggal/transcriptome.fa in each execution.

4.2.5. Input repeaters

The each qualifier allows you to repeat the execution of a process for each item in a collection, every time a new data is received. For example:

sequences = Channel.fromPath('data/prots/*.tfa')

methods = ['regular', 'expresso', 'psicoffee']

process alignSequences {

input:

file seq from sequences

each mode from methods

"""

t_coffee -in $seq -mode $mode

"""

}In the above example every time a file of sequences is received as input by the process, it executes three tasks running a T-coffee alignment with a different value for the mode parameter. This is useful when you need to repeat the same task for a given set of parameters.

Exercise

Extend the previous example so a task is executed for each read file matching the pattern data/ggal/*_1.fa and repeat the same task both with salmon and kallisto.

4.3. Outputs

The output declaration block allows to define the channels used by the process to send out the results produced.

There can be defined at most one output block and it can contain one or more outputs declarations. The output block follows the syntax shown below:

output: <output qualifier> <output name> [into <target channel>[,channel,..]]

4.3.1. Output values

The val qualifier allows to output a value defined in the script context. In a common usage scenario,

this is a value which has been defined in the input declaration block, as shown in the following example:

methods = ['prot','dna', 'rna']

process foo {

input:

val x from methods

output:

val x into receiver

"""

echo $x > file

"""

}

receiver.view { "Received: $it" }4.3.2. Output files

The file qualifier allows to output one or more files, produced by the process, over the specified channel.

process randomNum {

output:

file 'result.txt' into numbers

'''

echo $RANDOM > result.txt

'''

}

numbers.view { "Received: " + it.text }In the above example the process randomNum creates a file named result.txt containing a random number.

Since a file parameter using the same name is declared in the output block, when the task is completed that

file is sent over the numbers channel. A downstream process declaring the same channel as input will

be able to receive it.

4.3.3. Multiple output files

When an output file name contains a * or ? wildcard character it is interpreted as a

glob path matcher.

This allows to capture multiple files into a list object and output them as a sole emission. For example:

process splitLetters {

output:

file 'chunk_*' into letters

'''

printf 'Hola' | split -b 1 - chunk_

'''

}

letters

.flatMap()

.view { "File: ${it.name} => ${it.text}" }it prints:

File: chunk_aa => H File: chunk_ab => o File: chunk_ac => l File: chunk_ad => a

Some caveats on glob pattern behavior:

-

Input files are not included in the list of possible matches.

-

Glob pattern matches against both files and directories path.

-

When a two stars pattern

**is used to recourse across directories, only file paths are matched i.e. directories are not included in the result list.

Exercise

Remove the flatMap operator and see out the output change. The documentation

for the flatMap operator is available at this link.

4.3.4. Dynamic output file names

When an output file name needs to be expressed dynamically, it is possible to define it using a dynamic evaluated string which references values defined in the input declaration block or in the script global context. For example::

sample_ch = Channel.from ('human', 'mouse')

process foo {

input:

val x from sample_ch

output:

file "${x}.txt" into sample_files_ch

"""

touch ${x}.txt

"""

}

sample_files_ch.view()In the above example, each time the process is executed an alignment file is produced whose name depends

on the actual value of the x input.

4.3.5. Composite inputs and outputs

So far we have seen how to declare multiple input and output channels, but each channel was handling only one value at time. However Nextflow can handle tuple of values.

When using channel emitting tuple of values the corresponding input declaration must be declared with a set qualifier followed by definition of each single element in the tuple.

In the same manner output channel emitting tuple of values can be declared using the set qualifier

following by the definition of each tuple element in the tuple.

reads_ch = Channel.fromFilePairs('data/ggal/*_{1,2}.fq')

process foo {

input:

set val(sample_id), file(sample_files) from reads_ch

output:

set val(sample_id), file('sample.bam') into bam_ch

script:

"""

touch sample.bam

"""

}

bam_ch.view()

In previous versions of Nextflow tuple was called set but it was used exactly with the same semantic. It can still be used for backward compatibility.

|

Exercise

Modify the script of the previous exercise so that the bam file is named as the given sample_id.

4.4. When

The when declaration allows you to define a condition that must be verified in order to execute the process. This can be any expression that evaluates a boolean value.

It is useful to enable/disable the process execution depending the state of various inputs and parameters. For example:

params.dbtype = 'nr'

params.prot = 'data/prots/*.tfa'

proteins = Channel.fromPath(params.prot)

process find {

input:

file fasta from proteins

val type from params.dbtype

when:

fasta.name =~ /^BB11.*/ && type == 'nr'

script:

"""

blastp -query $fasta -db nr

"""

}4.5. Directives

Directive declarations allow the definition of optional settings that affect the execution of the current process without affecting the semantic of the task itself.

They must be entered at the top of the process body, before any other declaration blocks (i.e. input, output, etc).

Directives are commonly used to define the amount of computing resources to be used or other meta directives like that allows the definition of extra information for configuration or logging purpose. For example:

process foo {

cpus 2

memory 8.GB

container 'image/name'

script:

"""

your_command --this --that

"""

}The complete list of directives is available at this link.

Exercise

Modify the script of the previous exercise adding a tag directive logging the sample_id in the execution output.

4.6. Organise outputs

4.6.1. PublishDir directive

Nextflow manages independently workflow execution intermediate results from the pipeline expected outputs. Task output files are created in the task specific execution directory which is considered as a temporary directory that can be deleted upon completion.

The pipeline result files need to be marked explicitly using the directive publishDir in the process that’s creating such file. For example:

process makeBams {

publishDir "/some/directory/bam_files", mode: 'copy'

input:

file index from index_ch

tuple val(name), file(reads) from reads_ch

output:

tuple val(name), file ('*.bam') into star_aligned

"""

STAR --genomeDir $index --readFilesIn $reads

"""

}The above example will copy all bam files created by the star task in the directory path /some/directory/bam_files.

The publish directory can be local or remote. For example output files could be stored to a AWS S3 bucket just using the s3:// prefix in the target path.

|

You can use more then one publishDir to keep different outputs in separate directory. For example:

4.6.2. Manage semantic sub-directories

You can use more then one publishDir to keep different outputs in separate directory. For example:

params.reads = 'data/reads/*_{1,2}.fq.gz'

params.outdir = 'my-results'

Channel

.fromFilePairs(params.reads, flat: true)

.set{ samples_ch }

process foo {

publishDir "$params.outdir/$sampleId/", pattern: '*.fq'

publishDir "$params.outdir/$sampleId/counts", pattern: "*_counts.txt"

publishDir "$params.outdir/$sampleId/outlooks", pattern: '*_outlook.txt'

input:

set sampleId, file('sample1.fq.gz'), file('sample2.fq.gz') from samples_ch

output:

file "*"

script:

"""

< sample1.fq.gz zcat > sample1.fq

< sample2.fq.gz zcat > sample2.fq

awk '{s++}END{print s/4}' sample1.fq > sample1_counts.txt

awk '{s++}END{print s/4}' sample2.fq > sample2_counts.txt

head -n 50 sample1.fq > sample1_outlook.txt

head -n 50 sample2.fq > sample2_outlook.txt

"""

}The above example will create an output structure in the directory my-results, which contains a separate sub-directory for each given sample ID each of which contain the folders counts and outlooks.

5. Operators

-

Built-in functions applied to channels

-

Transform channels content

-

Can be used also to filter, fork and combine channels

5.1. Basic example

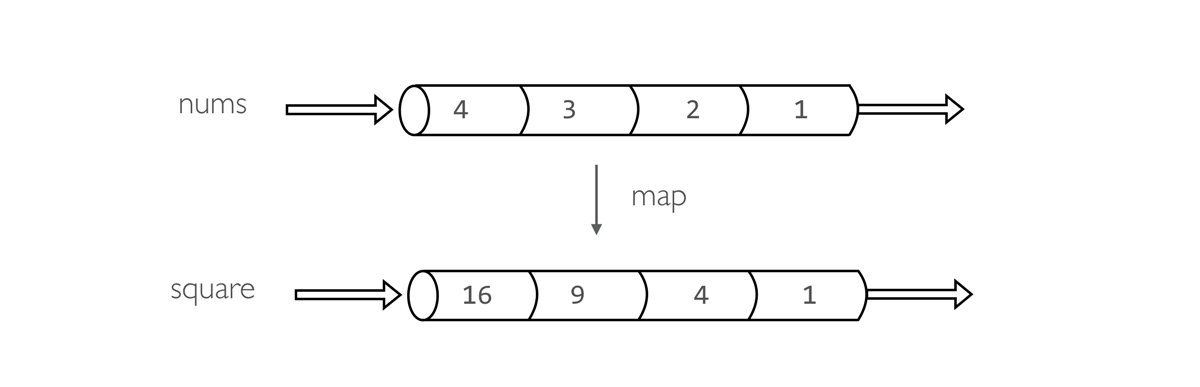

nums = Channel.from(1,2,3,4) (1)

square = nums.map { it -> it * it } (2)

square.view() (3)

| 1 | Create a queue channel emitting four values. |

| 2 | Create a new channels transforming each number in it’s square. |

| 3 | Print the channel content. |

Operators can be chained to implement custom behaviors:

Channel.from(1,2,3,4)

.map { it -> it * it }

.view()Operators can be separated in to five groups:

-

Filtering operators

-

Transforming operators

-

Splitting operators

-

Combining operators

-

Forking operators

-

Maths operators

5.2. Basic operators

5.2.1. view

The view operator prints the items emitted by a channel to the console standard output appending a

new line character to each of them. For example:

Channel

.from('foo', 'bar', 'baz')

.view()It prints:

foo

bar

bazAn optional closure parameter can be specified to customise how items are printed. For example:

Channel

.from('foo', 'bar', 'baz', 'qux')

.view { "- $it" }It prints:

- foo - bar - baz

5.2.2. map

The map operator applies a function of your choosing to every item emitted by a channel, and returns the items so obtained as a new channel. The function applied is called the mapping function and is expressed with a closure as shown in the example below:

Channel

.from( 'hello', 'world' )

.map { it -> it.reverse() }

.view()A map can associate to each element a generic tuple containing any data as needed.

Channel

.from( 'hello', 'world' )

.map { word -> [word, word.size()] }

.view { word, len -> "$word contains $len letters" }Exercise

Use fromPath to create a channel emitting the fastq files matching the pattern data/ggal/*.fq,

then chain with a map to return a pair containing the file name and the path itself.

Finally print the resulting channel.

Channel.fromPath('data/ggal/*.fq')

.map { file -> [ file.name, file ] }

.view { name, file -> "> file: $name" }5.2.3. into

The into operator connects a source channel to two or more target channels in such a way the values emitted by the source channel are copied to the target channels. For example:

Channel

.from( 'a', 'b', 'c' )

.into{ foo; bar }

foo.view{ "Foo emits: " + it }

bar.view{ "Bar emits: " + it }

Note the use in this example of curly brackets and the ; as channel names separator. This is needed because the actual parameter of into is a closure which defines the target channels to which the source one is connected.

|

5.2.4. mix

The mix operator combines the items emitted by two (or more) channels into a single channel.

c1 = Channel.from( 1,2,3 )

c2 = Channel.from( 'a','b' )

c3 = Channel.from( 'z' )

c1 .mix(c2,c3).view()1

2

a

3

b

z

The items in the resulting channel have the same order as in respective original channel,

however there’s no guarantee that the element of the second channel are append after the elements

of the first. Indeed in the above example the element a has been printed before 3.

|

5.2.5. flatten

The flatten operator transforms a channel in such a way that every tuple is flattened so that each single entry is emitted as a sole element by the resulting channel.

foo = [1,2,3]

bar = [4, 5, 6]

Channel

.from(foo, bar)

.flatten()

.view()The above snippet prints:

1

2

3

4

5

65.2.6. collect

The collect operator collects all the items emitted by a channel to a list and return the resulting object as a sole emission.

Channel

.from( 1, 2, 3, 4 )

.collect()

.view()It prints a single value:

[1,2,3,4]

The result of the collect operator is a value channel.

|

5.2.7. groupTuple

The groupTuple operator collects tuples (or lists) of values emitted by the source channel grouping together the elements that share the same key. Finally it emits a new tuple object for each distinct key collected.

Try the following example:

Channel

.from( [1,'A'], [1,'B'], [2,'C'], [3, 'B'], [1,'C'], [2, 'A'], [3, 'D'] )

.groupTuple()

.view()It shows:

[1, [A, B, C]]

[2, [C, A]]

[3, [B, D]]This operator is useful to process altogether all elements for which there’s a common property or a grouping key.

Exercise

Use fromPath to create a channel emitting the fastq files matching the pattern data/ggal/*.fq,

then use a map to associate to each file the name prefix. Finally group together all

files having the same common prefix.

5.2.8. join

The join operator creates a channel that joins together the items emitted by two channels for which exits a matching key. The key is defined, by default, as the first element in each item emitted.

left = Channel.from(['X', 1], ['Y', 2], ['Z', 3], ['P', 7])

right= Channel.from(['Z', 6], ['Y', 5], ['X', 4])

left.join(right).view()The resulting channel emits:

[Z, 3, 6]

[Y, 2, 5]

[X, 1, 4]5.2.9. branch

The branch operator allows you to forward the items emitted by a source channel to one or more output channels, choosing one out of them at a time.

The selection criteria is defined by specifying a closure that provides one or more boolean expression, each of which is identified by a unique label. On the first expression that evaluates to a true value, the current item is bound to a named channel as the label identifier. For example:

Channel

.from(1,2,3,40,50)

.branch {

small: it < 10

large: it > 10

}

.set { result }

result.small.view { "$it is small" }

result.large.view { "$it is large" }| The branch operator returns a multi-channel object i.e. a variable that holds more than one channel object. |

5.3. More resources

Check the operators documentation on Nextflow web site.

6. Groovy basic structures and idioms

Nextflow is a DSL implemented on top of the Groovy programming lang, which in turns is a super-set of the Java programming language. This means that Nextflow can run any Groovy and Java code.

6.1. Printing values

To print something is as easy as using one of the print or println methods.

println("Hello, World!")

The only difference between the two is that the println method implicitly appends a new line character to the printed string.

| parenthesis for function invocations are optional. Therefore also the following is a valid syntax. |

println "Hello, World!"

6.2. Comments

Comments use the same syntax as in the C-family programming languages:

// comment a single config file /* a comment spanning multiple lines */

6.3. Variables

To define a variable, simply assign a value to it:

x = 1 println x x = new java.util.Date() println x x = -3.1499392 println x x = false println x x = "Hi" println x

Local variables are defined using the def keyword:

def x = 'foo'

It should be always used when defining variables local to a function or a closure.

6.4. Lists

A List object can be defined by placing the list items in square brackets:

list = [10,20,30,40]

You can access a given item in the list with square-bracket notation (indexes start at 0) or using the get method:

assert list[0] == 10 assert list[0] == list.get(0)

In order to get the length of the list use the size method:

assert list.size() == 4

Lists can also be indexed with negative indexes and reversed ranges.

list = [0,1,2] assert list[-1] == 2 assert list[-1..0] == list.reverse()

List objects implements all methods provided by the Java java.util.List interface plus the extension methods provided by Groovy API.

assert [1,2,3] << 1 == [1,2,3,1]

assert [1,2,3] + [1] == [1,2,3,1]

assert [1,2,3,1] - [1] == [2,3]

assert [1,2,3] * 2 == [1,2,3,1,2,3]

assert [1,[2,3]].flatten() == [1,2,3]

assert [1,2,3].reverse() == [3,2,1]

assert [1,2,3].collect{ it+3 } == [4,5,6]

assert [1,2,3,1].unique().size() == 3

assert [1,2,3,1].count(1) == 2

assert [1,2,3,4].min() == 1

assert [1,2,3,4].max() == 4

assert [1,2,3,4].sum() == 10

assert [4,2,1,3].sort() == [1,2,3,4]

assert [4,2,1,3].find{it%2 == 0} == 4

assert [4,2,1,3].findAll{it%2 == 0} == [4,2]

6.5. Maps

Maps are like lists that have an arbitrary type of key instead of integer. Therefore, the syntax is very much aligned.

map = [a:0, b:1, c:2]

Maps can be accessed in a conventional square-bracket syntax or as if the key was a property of the map.

assert map['a'] == 0 (1)

assert map.b == 1 (2)

assert map.get('c') == 2 (3)

| 1 | Use of the square brackets. |

| 2 | Use a dot notation. |

| 3 | Use of get method. |

To add data or to modify a map, the syntax is similar to adding values to list:

map['a'] = 'x' (1)

map.b = 'y' (2)

map.put('c', 'z') (3)

assert map == [a:'x', b:'y', c:'z']

| 1 | Use of the square brackets. |

| 2 | Use a dot notation. |

| 3 | Use of get method. |

Maps objects implements all methods provided by the Java java.util.Map interface plus the extension methods provided by Groovy API.

6.6. String interpolation

String literals can be defined enclosing them either with single-quoted or double-quotes characters.

Double-quoted strings can contain the value of an arbitrary variable by prefixing its name with the $ character, or the value of

any expression by using the ${expression} syntax, similar to Bash/shell scripts:

foxtype = 'quick'

foxcolor = ['b', 'r', 'o', 'w', 'n']

println "The $foxtype ${foxcolor.join()} fox"

x = 'Hello'

println '$x + $y'

This code prints:

The quick brown fox $x + $y

Note the different use of $ and ${..} syntax to interpolate value expressions in a string literal.

|

Finally string literals can also be defined using the / character as delimiter. They are known as slashy

strings and are useful for defining regular expressions and patterns, as there is no need to escape backslashes.

As with double quote strings they allow to interpolate variables prefixed with a $ character.

Try the following to see the difference:

x = /tic\tac\toe/ y = 'tic\tac\toe' println x println y

it prints:

tic\tac\toe tic ac oe

6.7. Multi-line strings

A block of text that span multiple lines can be defined by delimiting it with triple single or double quotes:

text = """

Hello there James

how are you today?

"""

Finally multi-line strings can also be defined with slashy string. For example:

text = /

This is a multi-line

slashy string!

It's cool, isn't it?!

/

Like before, multi-line strings inside double quotes and slash characters support variable interpolation, while single-quoted multi-line strings do not.

6.8. If statement

The if statement uses the same syntax common other programming lang such Java, C, JavaScript, etc.

if( < boolean expression > ) {

// true branch

}

else {

// false branch

}

The else branch is optional. Also curly brackets are optional when the branch define just a single statement.

x = 1

if( x > 10 )

println 'Hello'

null, empty strings and empty collections are evaluated to false.

|

Therefore a statement like:

list = [1,2,3]

if( list != null && list.size() > 0 ) {

println list

}

else {

println 'The list is empty'

}

Can be written as:

if( list )

println list

else

println 'The list is empty'

See the Groovy-Truth for details.

In some cases can be useful to replace if statement with a ternary expression aka conditional expression. For example:

|

println list ? list : 'The list is empty'

The previous statement can be further simplified using the Elvis operator as shown below:

println list ?: 'The list is empty'

6.9. For statement

The classical for loop syntax is supported as shown here:

for (int i = 0; i <3; i++) {

println("Hello World $i")

}

Iteration over list objects is also possible using the syntax below:

list = ['a','b','c']

for( String elem : list ) {

println elem

}

6.10. Functions

It is possible to define a custom function into a script, as shown here:

int fib(int n) {

return n < 2 ? 1 : fib(n-1) + fib(n-2)

}

assert fib(10)==89

A function can take multiple arguments separating them with a comma. The return keyword can be omitted and the function implicitly returns the value of the last evaluated expression. Also explicit types can be omitted (thought not recommended):

def fact( n ) {

n > 1 ? n * fact(n-1) : 1

}

assert fact(5) == 120

6.11. Closures

Closures are the swiss army knife of Nextflow/Groovy programming. In a nutshell a closure is is a block of code that can be passed as an argument to a function, it could also be defined an anonymous function.

More formally, a closure allows the definition of functions as first class objects.

square = { it * it }

The curly brackets around the expression it * it tells the script interpreter to treat this expression as code.

The it identifier is an implicit variable that represents the value that is passed to the function when it is invoked.

Once compiled the function object is assigned to the variable square as any other variable assignments shown previously.

To invoke the closure execution use the special method call or just use the round parentheses to specify the closure parameter(s).

For example:

assert square.call(5) == 25 assert square(9) == 81

This is not very interesting until we find that we can pass the function square as an argument to other functions or methods.

Some built-in functions take a function like this as an argument. One example is the collect method on lists:

x = [ 1, 2, 3, 4 ].collect(square) println x

It prints:

[ 1, 4, 9, 16 ]

By default, closures take a single parameter called it, to give it a different name use the → syntax. For example:

square = { num -> num * num }

It’s also possible to define closures with multiple, custom-named parameters.

For example, the method each() when applied to a map can take a closure with two arguments, to which it passes the key-value

pair for each entry in the map object. For example:

printMap = { a, b -> println "$a with value $b" }

values = [ "Yue" : "Wu", "Mark" : "Williams", "Sudha" : "Kumari" ]

values.each(printMap)

It prints:

Yue with value Wu Mark with value Williams Sudha with value Kumari

A closure has two other important features. First, it can access and modify variables in the scope where it is defined.

Second, a closure can be defined in an anonymous manner, meaning that it is not given a name, and is defined in the place where it needs to be used.

As an example showing both these features, see the following code fragment:

result = 0 (1)

values = ["China": 1 , "India" : 2, "USA" : 3] (2)

values.keySet().each { result += values[it] } (3)

println result

| 1 | Define a global variable. |

| 2 | Define a map object. |

| 3 | Invoke the each method passing closure object which modifies the result variable. |

Learn more about closures in the Groovy documentation.

6.12. More resources

The complete Groovy language documentation is available at this link.

A great resource to master Apache Groovy syntax is Groovy in Action.

7. Simple Rna-Seq pipeline

During this tutorial you will implement a proof of concept of a RNA-Seq pipeline which:

-

Indexes a trascriptome file.

-

Performs quality controls

-

Performs quantification.

-

Create a MultiqQC report.

7.1. Define the pipeline parameters

The script script1.nf defines the pipeline input parameters.

params.reads = "$baseDir/data/ggal/*_{1,2}.fq"

params.transcriptome = "$baseDir/data/ggal/transcriptome.fa"

params.multiqc = "$baseDir/multiqc"

println "reads: $params.reads"Run it by using the following command:

nextflow run script1.nfTry to specify a different input parameter, for example:

nextflow run script1.nf --reads this/and/that7.1.1. Exercise

Modify the script1.nf adding a fourth parameter named outdir and set it to a default path

that will be used as the pipeline output directory.

7.1.2. Exercise

Modify the script1.nf to print all the pipeline parameters by using a single log.info command and a multiline string statement.

| see an example here. |

7.1.3. Recap

In this step you have learned:

-

How to define parameters in your pipeline script

-

How to pass parameters by using the command line

-

The use of

$varand${var}variable placeholders -

How to use multiline strings

-

How to use

log.infoto print information and save it in the log execution file

7.2. Create transcriptome index file

Nextflow allows the execution of any command or user script by using a process definition.

A process is defined by providing three main declarations: the process inputs, the process outputs and finally the command script.

The second example adds the index process.

/*

* pipeline input parameters

*/

params.reads = "$baseDir/data/ggal/*_{1,2}.fq"

params.transcriptome = "$baseDir/data/ggal/transcriptome.fa"

params.multiqc = "$baseDir/multiqc"

params.outdir = "results"

log.info """\

R N A S E Q - N F P I P E L I N E

===================================

transcriptome: ${params.transcriptome}

reads : ${params.reads}

outdir : ${params.outdir}

"""

.stripIndent()

/*

* define the `index` process that create a binary index

* given the transcriptome file

*/

process index {

input:

path transcriptome from params.transcriptome

output:

path 'index' into index_ch

script:

"""

salmon index --threads $task.cpus -t $transcriptome -i index

"""

}It takes the transcriptome file as input and creates the transcriptome index by using the salmon tool.

Note how the input declaration defines a transcriptome variable in the process context

that it is used in the command script to reference that file in the Salmon command line.

Try to run it by using the command:

nextflow run script2.nfThe execution will fail because Salmon is not installed in your environment.

Add the command line option -with-docker to launch the execution through a Docker container

as shown below:

nextflow run script2.nf -with-dockerThis time it works because it uses the Docker container nextflow/rnaseq-nf defined in the

nextflow.config file.

In order to avoid to add the option -with-docker add the following line in the nextflow.config file:

docker.enabled = true7.2.1. Exercise

Enable the Docker execution by default adding the above setting in the nextflow.config file.

7.2.2. Exercise

Print the output of the index_ch channel by using the view

operator.

7.2.3. Exercise

Use the command tree work to see how Nextflow organises the process work directory.

7.2.4. Recap

In this step you have learned:

-

How to define a process executing a custom command

-

How process inputs are declared

-

How process outputs are declared

-

How to access the number of available CPUs

-

How to print the content of a channel

7.3. Collect read files by pairs

This step shows how to match read files into pairs, so they can be mapped by Salmon.

Edit the script script3.nf and add the following statement as the last line:

read_pairs_ch.view()Save it and execute it with the following command:

nextflow run script3.nfIt will print an output similar to the one shown below:

[ggal_gut, [/.../data/ggal/gut_1.fq, /.../data/ggal/gut_2.fq]]

The above example shows how the read_pairs_ch channel emits tuples composed by

two elements, where the first is the read pair prefix and the second is a list

representing the actual files.

Try it again specifying different read files by using a glob pattern:

nextflow run script3.nf --reads 'data/ggal/*_{1,2}.fq'

File paths including one or more wildcards ie. *, ?, etc. MUST be

wrapped in single-quoted characters to avoid Bash expands the glob.

|

7.3.1. Exercise

Use the set operator in place

of = assignment to define the read_pairs_ch channel.

7.3.2. Exercise

Use the checkIfExists option from the fromFilePairs method

to check if the specified path contains at least a file pair.

7.3.3. Recap

In this step you have learned:

-

How to use

fromFilePairsto handle read pair files -

How to use the

setoperator to define a new channel variable -

How to use the

checkIfExistsoption to check input file existence

7.4. Perform expression quantification

The script script4.nf adds the quantification process.

In this script note as the index_ch channel, declared as output in the index process,

is now used as a channel in the input section.

Also note as the second input is declared as a tuple composed by two elements:

the pair_id and the reads in order to match the structure of the items emitted

by the read_pairs_ch channel.

Execute it by using the following command:

nextflow run script4.nf -resumeYou will see the execution of the quantification process.

The -resume option cause the execution of any step that has been already processed to be skipped.

Try to execute it with more read files as shown below:

nextflow run script4.nf -resume --reads 'data/ggal/*_{1,2}.fq'You will notice that the quantification process is executed more than

one time.

Nextflow parallelizes the execution of your pipeline simply by providing multiple input data to your script.

7.4.1. Exercise

Add a tag directive to the

quantification process to provide a more readable execution log.

7.4.2. Exercise

Add a publishDir directive

to the quantification process to store the process results into a directory of your choice.

7.4.3. Recap

In this step you have learned:

-

How to connect two processes by using the channel declarations

-

How to resume the script execution skipping already computed steps

-

How to use the

tagdirective to provide a more readable execution output -

How to use the

publishDirto store a process results in a path of your choice

7.5. Quality control

This step implements a quality control of your input reads. The inputs are the same

read pairs which are provided to the quantification steps

You can run it by using the following command:

nextflow run script5.nf -resumeThe script will report the following error message:

Channel `read_pairs_ch` has been used twice as an input by process `fastqc` and process `quantification`

7.5.1. Exercise

Modify the creation of the read_pairs_ch channel by using a into

operator in place of a set.

| see an example here. |

7.5.2. Recap

In this step you have learned:

-

How to use the

intooperator to create multiple copies of the same channel

7.6. MultiQC report

This step collect the outputs from the quantification and fastqc steps to create

a final report by using the MultiQC tool.

Execute the script with the following command:

nextflow run script6.nf -resume --reads 'data/ggal/*_{1,2}.fq'It creates the final report in the results folder in the current work directory.

In this script note the use of the mix

and collect operators chained

together to get all the outputs of the quantification and fastqc process as a single

input.

7.6.1. Recap

In this step you have learned:

-

How to collect many outputs to a single input with the

collectoperator -

How to

mixtwo channels in a single channel -

How to chain two or more operators together

7.7. Handle completion event

This step shows how to execute an action when the pipeline completes the execution.

Note that Nextflow processes define the execution of asynchronous tasks i.e. they are not executed one after another as they are written in the pipeline script as it would happen in a common imperative programming language.

The script uses the workflow.onComplete event handler to print a confirmation message

when the script completes.

workflow.onComplete {

println ( workflow.success ? "\nDone! Open the following report in your browser --> $params.outdir/multiqc_report.html\n" : "Oops .. something went wrong" )

}Try to run it by using the following command:

nextflow run script7.nf -resume --reads 'data/ggal/*_{1,2}.fq'7.8. Custom scripts

Real world pipelines use a lot of custom user scripts (BASH, R, Python, etc). Nextflow

allows you to use and manage all these scripts in consistent manner. Simply put them

in a directory named bin in the pipeline project root. They will be automatically added

to the pipeline execution PATH.

For example, create a file named fastqc.sh with the following content:

#!/bin/bash

set -e

set -u

sample_id=${1}

reads=${2}

mkdir fastqc_${sample_id}_logs

fastqc -o fastqc_${sample_id}_logs -f fastq -q ${reads}Save it, give execute permission and move it in the bin directory as shown below:

chmod +x fastqc.sh

mkdir -p bin

mv fastqc.sh binThen, open the script7.nf file and replace the fastqc process' script with

the following code:

script:

"""

fastqc.sh "$sample_id" "$reads"

"""Run it as before:

nextflow run script7.nf -resume --reads 'data/ggal/*_{1,2}.fq'7.8.1. Recap

In this step you have learned:

-

How to write or use existing custom script in your Nextflow pipeline.

-

How to avoid the use of absolute paths having your scripts in the

bin/project folder.

7.9. Metrics and reports

Nextflow is able to produce multiple reports and charts providing several runtime metrics and execution information.

Run the rnaseq-nf pipeline previously introduced as shown below:

nextflow run rnaseq-nf -with-docker -with-report -with-trace -with-timeline -with-dag dag.pngThe -with-report option enables the creation of the workflow execution report. Open the file report.html with a browser to see the report created with the above command.

The -with-trace option enables the create of a tab separated file containing runtime information for each executed task. Check the content of the file trace.txt for an example.

The -with-timeline option enables the creation of the workflow timeline report showing how processes where executed along time. This may

be useful to identify most time consuming tasks and bottlenecks. See an example at this link.

Finally the -with-dag option enables to rendering of the workflow execution direct acyclic graph representation.

Note: this feature requires the installation of Graphviz in your computer.

See here for details.

Note: runtime metrics may be incomplete for run short running tasks as in the case of this tutorial.

| You view the HTML files right-clicking on the file name in the left side-bar and choosing the Preview menu item. |

7.10. Mail notification

Send a notification email when the workflow execution complete using the -N <email address>

command line option. Execute again the previous example specifying your email address:

nextflow run script7.nf -resume --reads 'data/ggal/*_{1,2}.fq' -N <your email>

Your computer must have installed a pre-configured mail tool, such as mail or sendmail.

|

Alternatively you can provide the settings of the STMP server needed to send the mail notification in the Nextflow config file. See mail documentation for details.

7.11. Run a project from GitHub

Nextflow allows the execution of a pipeline project directly from a GitHub repository (or similar services eg. BitBucket and GitLab).

This simplifies the sharing and the deployment of complex projects and tracking changes in a consistent manner.

The following GitHub repository hosts a complete version of the workflow introduced in this tutorial:

You can run it by specifying the project name as shown below:

nextflow run nextflow-io/rnaseq-nf -with-dockerIt automatically downloads it and store in the $HOME/.nextflow folder.

Use the command info to show the project information, e.g.:

nextflow info nextflow-io/rnaseq-nfNextflow allows the execution of a specific revision of your project by using the -r command line option. For Example:

nextflow run nextflow-io/rnaseq-nf -r devRevision are defined by using Git tags or branches defined in the project repository.

This allows a precise control of the changes in your project files and dependencies over time.

7.12. More resources

-

Nextflow documentation - The Nextflow docs home.

-

Nextflow patterns - A collection of Nextflow implementation patterns.

-

CalliNGS-NF - A Variant calling pipeline implementing GATK best practices.

-

nf-core - A community collection of production ready genomic pipelines.

8. Manage dependencies & containers

Computational workflows rarely are composed by as single script or tool. Most of the times they require the usage of dozens of different software components or libraries.

Installing and maintaining such dependencies is a challenging task and the most common source of irreproducibility in scientific applications.

Containers are exceptionally useful in scientific workflows. They allow the encapsulation of software dependencies, i.e. tools and libraries required by a data analysis application in one or more self-contained, ready-to-run, immutable container images that can be easily deployed in any platform supporting the container runtime.

8.1. Docker hands-on

Get practice with basic Docker commands to pull, run and build your own containers.

A container is a ready-to-run Linux environment which can be executed in an isolated manner from the hosting system. It has own copy of the file system, processes space, memory management, etc.

Containers are a Linux feature known as Control Groups or Cgroups introduced with kernel 2.6.

Docker adds to this concept an handy management tool to build, run and share container images.

These images can be uploaded and published in a centralised repository know as Docker Hub, or hosted by other parties like for example Quay.

8.1.1. Run a container

Run a container is easy as using the following command:

docker run <container-name>For example:

docker run hello-world8.1.2. Pull a container

The pull command allows you to download a Docker image without running it. For example:

docker pull debian:stretch-slimThe above command download a Debian Linux image.

8.1.3. Run a container in interactive mode

Launching a BASH shell in the container allows you to operate in an interactive mode in the containerised operating system. For example:

docker run -it debian:jessie-slim bashOnce launched the container you wil noticed that’s running as root (!). Use the usual commands to navigate in the file system.

To exit from the container, stop the BASH session with the exit command.

8.1.4. Your first Dockerfile

Docker images are created by using a so called Dockerfile i.e. a simple text file

containing a list of commands to be executed to assemble and configure the image

with the software packages required.

In this step you will create a Docker image containing the Salmon tool.

Warning: the Docker build process automatically copies all files that are located in the

current directory to the Docker daemon in order to create the image. This can take

a lot of time when big/many files exist. For this reason it’s important to always work in

a directory containing only the files you really need to include in your Docker image.

Alternatively you can use the .dockerignore file to select the path to exclude from the build.

Then use your favourite editor eg. vim to create a file named Dockerfile and copy the

following content:

FROM debian:jessie-slim

MAINTAINER <your name>

RUN apt-get update && apt-get install -y curl cowsay

ENV PATH=$PATH:/usr/games/When done save the file.

8.1.5. Build the image

Build the Docker image by using the following command:

docker build -t my-image .Note: don’t miss the dot in the above command. When it completes, verify that the image has been created listing all available images:

docker imagesYou can try your new container by running this command:

docker run my-image cowsay Hello Docker!8.1.6. Add a software package to the image

Add the Salmon package to the Docker image by adding to the Dockerfile the following snippet:

RUN curl -sSL https://github.com/COMBINE-lab/salmon/releases/download/v0.8.2/Salmon-0.8.2_linux_x86_64.tar.gz | tar xz \

&& mv /Salmon-*/bin/* /usr/bin/ \

&& mv /Salmon-*/lib/* /usr/lib/Save the file and build again the image with the same command as before:

docker build -t my-image .You will notice that it creates a new Docker image with the same name but with a different image ID.

8.1.7. Run Salmon in the container

Check that everything is fine running Salmon in the container as shown below:

docker run my-image salmon --versionYou can even launch a container in an interactive mode by using the following command:

docker run -it my-image bashUse the exit command to terminate the interactive session.

8.1.8. File system mounts

Create an genome index file by running Salmon in the container.

Try to run Salmon in the container with the following command:

docker run my-image \

salmon index -t $PWD/data/ggal/transcriptome.fa -i indexThe above command fails because Salmon cannot access the input file.

This happens because the container runs in a complete separate file system and it cannot access the hosting file system by default.

You will need to use the --volume command line option to mount the input file(s) eg.

docker run --volume $PWD/data/ggal/transcriptome.fa:/transcriptome.fa my-image \

salmon index -t /transcriptome.fa -i index

the generated transcript-index directory is still not accessible in the host file system (and actually it went lost).

|

| An easier way is to mount a parent directory to an identical one in the container, this allows you to use the same path when running it in the container eg. |

docker run --volume $HOME:$HOME --workdir $PWD my-image \

salmon index -t $PWD/data/ggal/transcriptome.fa -i indexCheck the content of the transcript-index folder entering the command:

| Note that the permissions for files created by the Docker execution is root. |

Exercise

Use the option -u $(id -u):$(id -g) to allow Docker to create files with the right permission.

8.1.9. Upload the container in the Docker Hub (bonus)

Publish your container in the Docker Hub to share it with other people.

Create an account in the hub.docker.com web site. Then from your shell terminal run the following command, entering the user name and password you specified registering in the Hub:

docker loginTag the image with your Docker user name account:

docker tag my-image <user-name>/my-imageFinally push it to the Docker Hub:

docker push <user-name>/my-imageAfter that anyone will be able to download it by using the command:

docker pull <user-name>/my-imageNote how after a pull and push operation, Docker prints the container digest number e.g.

Digest: sha256:aeacbd7ea1154f263cda972a96920fb228b2033544c2641476350b9317dab266

Status: Downloaded newer image for nextflow/rnaseq-nf:latestThis is a unique and immutable identifier that can be used to reference container image in a univocally manner. For example:

docker pull nextflow/rnaseq-nf@sha256:aeacbd7ea1154f263cda972a96920fb228b2033544c2641476350b9317dab2668.1.10. Run a Nextflow script using a Docker container

The simplest way to run a Nextflow script with a Docker image is using the -with-docker command line option:

nextflow run script2.nf -with-docker my-imageWe’ll see later how to configure in the Nextflow config file which container to use instead of having to specify every time as a command line argument.

8.2. Singularity

Singularity is container runtime designed to work in HPC data center, where the usage of Docker is generally not allowed due to security constraints.

Singularity implements a container execution model similarly to Docker however it uses a complete different implementation design.

A Singularity container image is archived as a plain file that can be stored in a shared file system and accessed by many computing nodes managed by a batch scheduler.

8.2.1. Create a Singularity image

Singularity images are created using a Singularity file in similar manner to Docker, though using a different syntax.

Bootstrap: docker

From: debian:stretch-slim

%environment

export PATH=$PATH:/usr/games/

%labels

AUTHOR <your name>

%post

apt-get update && apt-get install -y locales-all curl cowsay

curl -sSL https://github.com/COMBINE-lab/salmon/releases/download/v1.0.0/salmon-1.0.0_linux_x86_64.tar.gz | tar xz \

&& mv /salmon-*/bin/* /usr/bin/ \

&& mv /salmon-*/lib/* /usr/lib/Once you have save the Singularity file. Create the image with these commands:

sudo singularity build my-image.sif SingularityNote: the build command requires sudo permissions. A common workaround consists to build the image on a local

workstation and then deploy in the cluster just copying the image file.

8.2.2. Running a container

Once done, you can run your container with the following command

singularity exec my-image.sif cowsay 'Hello Singularity'By using the shell command you can enter in the container in interactive mode. For example:

singularity shell my-image.sifOnce in the container instance run the following commands:

touch hello.txt

ls -la

Note how the files on the host environment are shown. Singularity automatically mounts the host $HOME directory and uses the current work directory.

|

8.2.3. Import a Docker image

An easier way to create Singularity container without requiring sudo permission and boosting the containers interoperability is to import a Docker container image pulling it directly from a Docker registry. For example: